This week we continue our study of tensor properties with additive and spectral decompositions of tensors. We shall also look at orthogonal tensors. The slides are presented in the video.

Here are the actual slides we will use in class. They begin with a repeat of some of last week’s outstanding issues that you ought to have gone through on your own. These are: products of determinants, trace of compositions, and scalar product of tensors. You must be current on these in order to understand this week’s menu.

The five topics covered here are:

1. The tensor set as a Euclidean Vector Space

2. Additive Decompositions

3. The Cofactor Tensor and its geometric interpretation

4. Orthogonal Tensors

5. The Axial Vector

These are narrated in the Vimeo video above, and the downloadable slides are here:

http://oafak.com/wp-content/uploads/2019/09/Week-Eight-.pdf

Wooow, thanks so much for the slide videos; they make learning easier. May God continue to bless. ❤️❤️❤️

Is there a relationship between an orthogonal tensor and the identity tensor, since it transforms a vector to the same vector?

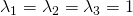

Yes it is orthogonal – in fact a proper orthogonal tensor – a rotation through angle about every axis. Furthermore, its three eigenvalues,

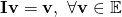

about every axis. Furthermore, its three eigenvalues,  , Every vector is an eigenvector to the Identity Tensor as,

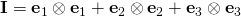

, Every vector is an eigenvector to the Identity Tensor as,  and its unique eigen-projectors are made of dyads from itself:

and its unique eigen-projectors are made of dyads from itself:  .

.
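The claims in this reply can be checked numerically. The sketch below uses plain Python lists; the helper names (`matmul`, `transpose`, `det3`, `apply`) and the test vector are illustrative assumptions, not anything taken from the slides. It confirms that the identity satisfies the orthogonality condition, has determinant +1 (so it is proper), and maps a sample vector to itself.

```python
# Plain-Python check that the 3x3 identity is a proper orthogonal tensor.
# All helper names here are illustrative, not from the course material.

def matmul(a, b):
    """Matrix product of two 3x3 nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(a):
    """Transpose of a 3x3 nested list."""
    return [[a[j][i] for j in range(3)] for i in range(3)]

def det3(a):
    """Determinant of a 3x3 nested list by cofactor expansion along row 0."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))

def apply(a, v):
    """Action of a 3x3 tensor on a vector."""
    return [sum(a[i][j] * v[j] for j in range(3)) for i in range(3)]

I = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]

# Orthogonality: I^T I = I
is_orthogonal = matmul(transpose(I), I) == I
# Proper: det I = +1
is_proper = det3(I) == 1.0
# Eigenvector with eigenvalue 1: I v = v for an arbitrary sample vector
v = [2.0, -3.0, 5.0]
is_eigenvector = apply(I, v) == v
```

Any other vector could be substituted for `v`; the point is only that no direction is singled out.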

Thank you sir

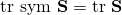

Since

Can we as well say that

As in

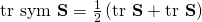

Positively, absolutely! That is correct! Furthermore, the trace of the skew part of any tensor is zero.
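A small numerical sketch of the fact about the skew part, assuming the usual additive split of a tensor into symmetric and skew parts, $\mathbf{T} = \operatorname{sym}\mathbf{T} + \operatorname{skw}\mathbf{T}$. The function names and the sample tensor are illustrative choices only.

```python
# Symmetric/skew split of a 3x3 tensor and the traces of the parts.
# Names (sym, skw, trace) and the sample tensor T are illustrative.

def sym(a):
    """Symmetric part: (A + A^T) / 2."""
    return [[0.5 * (a[i][j] + a[j][i]) for j in range(3)] for i in range(3)]

def skw(a):
    """Skew part: (A - A^T) / 2."""
    return [[0.5 * (a[i][j] - a[j][i]) for j in range(3)] for i in range(3)]

def trace(a):
    """Sum of the diagonal components."""
    return sum(a[i][i] for i in range(3))

T = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0],
     [7.0, 8.0, 10.0]]

S = sym(T)
W = skw(T)

# The two parts add back to T
recombined = [[S[i][j] + W[i][j] for j in range(3)] for i in range(3)]

trace_T = trace(T)
trace_S = trace(S)
trace_W = trace(W)
```

Because the skew part contributes nothing to the trace, the trace of the tensor equals the trace of its symmetric part.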

Okay thank you sir

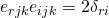

Sir, on the last part about the axial vector: $-2\delta_{k\alpha}v_k = \epsilon_{\alpha ij}\Omega_{ij}$,

where $\delta_{k\alpha}$ is the Kronecker delta and $\epsilon_{\alpha ij}$ is the Levi-Civita symbol.

You arrived at $v_i = -\frac{1}{2}\epsilon_{ijk}\Omega_{jk}$.

I understand that the Kronecker delta substituted the $k$ for $\alpha$ and that it is a dummy. I also understand how the other side came to be, but how did $\Omega$ come to have $jk$?

Sorry for the poor typing sir

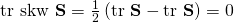

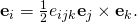

S1.31: It was established that .


S2.33: We established the fact that

S4.3: We transferred the Levi-Civita to the other side in the above equation and showed that,

This is essentially the same argument as the one here.
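The index names on $\Omega$ are summed (dummy) indices, so renaming them from $ij$ to $jk$ changes nothing once the free index is renamed to $i$. The sketch below illustrates this with the formula quoted in the question, $v_i = -\frac{1}{2}\epsilon_{ijk}\Omega_{jk}$. It assumes the convention that the skew tensor acts as $\boldsymbol{\Omega}\mathbf{u} = \mathbf{v}\times\mathbf{u}$; the function names and sample numbers are illustrative.

```python
# Axial vector of a skew tensor via v_i = -1/2 e_ijk Omega_jk.
# Assumed convention: Omega u = v x u. Names are illustrative.

def levi_civita(i, j, k):
    """+1 for even permutations of (0, 1, 2), -1 for odd, 0 if an index repeats."""
    return (i - j) * (j - k) * (k - i) // 2

def axial_vector(omega):
    """v_i = -1/2 e_ijk Omega_jk; j and k are dummy (summed) indices."""
    return [-0.5 * sum(levi_civita(i, j, k) * omega[j][k]
                       for j in range(3) for k in range(3))
            for i in range(3)]

def cross(a, b):
    """Vector (cross) product a x b."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def apply(a, u):
    """Action of a 3x3 tensor on a vector."""
    return [sum(a[i][j] * u[j] for j in range(3)) for i in range(3)]

# Build a skew tensor from a chosen vector: Omega_ij = -e_ijk v_k
v = [1.0, 2.0, 3.0]
Omega = [[-sum(levi_civita(i, j, k) * v[k] for k in range(3)) for j in range(3)]
         for i in range(3)]

# Recover the axial vector and compare the two actions on a sample vector
v_recovered = axial_vector(Omega)
u = [4.0, -1.0, 2.0]
omega_u = apply(Omega, u)
v_cross_u = cross(v, u)
```

Inside `axial_vector` the loop variables could be called anything; only the free index `i` survives to label the result.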

The solution for this is shown in Week 3, pg 29, using the Levi-Civita.

I have checked that, for any 2×2 matrix taken from the original 3×3 matrix, its determinant (of the 2×2) is the final answer. I tested it using its application in Week 8, pg 27, and I also tested that it works if there are two shared indices, since you would use your Kronecker substitution.

So does this rule apply generally?

The rules that apply generally are in the summation convention. The matrix rules you are developing are not correct. The actual matrix representation of the Levi-Civita tensor is a 3×3×3 array, which gives 27 components, not the 9 of a 3×3 matrix! The 2D matrix representation you are trying to imagine does not apply to tensors of order three or higher! The equations you have above are also not correct, in that you cannot take the dot product of the two operands you are working with, because they are scalar components of the Levi-Civita tensor. They are NOT vectors!
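To make the component count concrete, the sketch below enumerates the Levi-Civita components as plain scalars. It also checks a standard identity for the contraction over one shared index, $\epsilon_{ijk}\epsilon_{ist} = \delta_{js}\delta_{kt} - \delta_{jt}\delta_{ks}$; that this is the relation under discussion in the comment is an assumption. The function names are illustrative.

```python
# The Levi-Civita symbol as 27 scalar components, and the one-index
# contraction identity. Function names are illustrative.

def levi_civita(i, j, k):
    """+1 for even permutations of (0, 1, 2), -1 for odd, 0 if an index repeats."""
    return (i - j) * (j - k) * (k - i) // 2

def delta(i, j):
    """Kronecker delta."""
    return 1 if i == j else 0

# All 3 x 3 x 3 = 27 components, each one a scalar
components = {(i, j, k): levi_civita(i, j, k)
              for i in range(3) for j in range(3) for k in range(3)}

# Only the six permutations of (0, 1, 2) are nonzero
nonzero = {idx: val for idx, val in components.items() if val != 0}

# e_ijk e_ist = delta_js delta_kt - delta_jt delta_ks, checked for every
# choice of the four free indices (i is summed)
identity_holds = all(
    sum(levi_civita(i, j, k) * levi_civita(i, s, t) for i in range(3))
    == delta(j, s) * delta(k, t) - delta(j, t) * delta(k, s)
    for j in range(3) for k in range(3) for s in range(3) for t in range(3)
)
```

The right-hand side has the form of a 2×2 determinant of Kronecker deltas, but it is a statement about scalar components under the summation convention, not about slicing a 3×3 matrix.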

This is a course beginning with vector and tensor analysis. Spend your effort wisely in understanding the principles you are being taught before you begin to look for patterns to match. You are yet to understand something as simple as the volume of a parallelepiped that was taught in the first class! This, and other basic ideas, should be mastered first!

Please sir how do I go about solving this:

Areo Ajibola

Systems Engineering

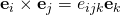

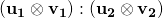

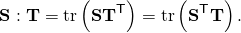

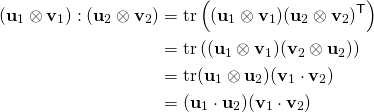

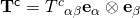

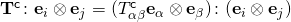

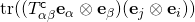

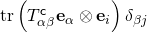

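The product of two or more dyads is treated in S7.17. The scalar product of any two tensors $\mathbf{A}$ and $\mathbf{B}$ can be found:

$$\mathbf{A}:\mathbf{B} = \operatorname{tr}\left(\mathbf{A}^{\mathsf{T}}\mathbf{B}\right) \qquad (1)$$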

Accordingly,

(2)
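A quick numerical check of the scalar product, assuming the usual definition $\mathbf{A}:\mathbf{B} = \operatorname{tr}(\mathbf{A}^{\mathsf{T}}\mathbf{B}) = A_{ij}B_{ij}$. The symbols, helper names and sample values are illustrative and are not taken from S7.17. The last lines apply the same product to two dyads, where it reduces to a product of two dot products.

```python
# Scalar product of two tensors, A : B = tr(A^T B) = A_ij B_ij,
# and its value for two dyads. Names and values are illustrative.

def transpose(a):
    return [[a[j][i] for j in range(3)] for i in range(3)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def trace(a):
    return sum(a[i][i] for i in range(3))

def scalar_product(a, b):
    """A : B computed as the trace of A^T B."""
    return trace(matmul(transpose(a), b))

def dyad(a, b):
    """Dyad a (x) b with components a_i b_j."""
    return [[a[i] * b[j] for j in range(3)] for i in range(3)]

def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))

A = [[1, 2, 0], [0, 1, 3], [4, 0, 1]]
B = [[2, 0, 1], [1, 1, 0], [0, 5, 2]]

via_trace = scalar_product(A, B)
via_components = sum(A[i][j] * B[i][j] for i in range(3) for j in range(3))

# For dyads: (a (x) b) : (c (x) d) = (a . c)(b . d)
a, b, c, d = [1, 2, 3], [0, 1, -1], [2, 0, 1], [3, 2, 1]
dyad_product = scalar_product(dyad(a, b), dyad(c, d))
dot_product_form = dot(a, c) * dot(b, d)
```

Integer components are used so that the comparisons are exact.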

Good day sir.

In page 19 of Chapter 3, there was an expression:

c : ( ⊗ ) =

I don’t understand how the inner product, which is meant to be the trace of the transpose of (c multiplied by the dyad), equaled the constant.

Your question is not clear. You did not write a meaningful expression looking like anything on page 19, Chapter 3. I will be able to help you once you are clearer and more specific.

It is quite possible you are thinking of page 19 of Chapter 2. In that case I have the following to say:

Start from the component-wise expression for the cofactor of the tensor. Taking the inner product of both sides with the dyad immediately gives an expression that can be rewritten, simplified further and, finally, reduced to the required result.

There is more information about the rest of this issue in my response to Abdulazeez Opeyemi Lawal in Week Nine. Also check out S8.24-27 for more on this.
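For readers who want to experiment with the cofactor, here is a sketch that assumes the standard component form $(\operatorname{cof}\mathbf{T})_{ij} = \frac{1}{2}\epsilon_{imn}\epsilon_{jpq}T_{mp}T_{nq}$, which may differ in notation from the slides. It checks two standard properties: $\mathbf{T}^{\mathsf{T}}\operatorname{cof}\mathbf{T} = (\det\mathbf{T})\,\mathbf{I}$, and the geometric statement $\operatorname{cof}\mathbf{T}\,(\mathbf{a}\times\mathbf{b}) = (\mathbf{T}\mathbf{a})\times(\mathbf{T}\mathbf{b})$. All names and sample values are illustrative.

```python
# Cofactor of a 3x3 tensor from the Levi-Civita component form, with two
# consistency checks. Names and sample values are illustrative.

R = range(3)

def levi_civita(i, j, k):
    """+1 for even permutations of (0, 1, 2), -1 for odd, 0 if an index repeats."""
    return (i - j) * (j - k) * (k - i) // 2

def cofactor(t):
    """(cof T)_ij = 1/2 e_imn e_jpq T_mp T_nq, summed over m, n, p, q."""
    return [[0.5 * sum(levi_civita(i, m, n) * levi_civita(j, p, q)
                       * t[m][p] * t[n][q]
                       for m in R for n in R for p in R for q in R)
             for j in R] for i in R]

def det3(a):
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))

def apply(a, v):
    return [sum(a[i][j] * v[j] for j in R) for i in R]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

T = [[2.0, 0.0, 1.0],
     [1.0, 3.0, 0.0],
     [0.0, 1.0, 4.0]]
C = cofactor(T)

# Check 1: T^T cof(T) = det(T) I
Tt_C = [[sum(T[k][i] * C[k][j] for k in R) for j in R] for i in R]
det_T = det3(T)

# Check 2: cof(T) maps the area vector a x b to (T a) x (T b)
a = [1.0, 2.0, 0.0]
b = [0.0, 1.0, -1.0]
lhs = apply(C, cross(a, b))
rhs = cross(apply(T, a), apply(T, b))
```

The second check is the geometric reading of the cofactor: it transforms oriented areas in the way the tensor itself transforms vectors.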

Sir, the trace of a deviatoric and skew tensor is zero; does this mean all its diagonal elements are zero, or can they be a combination like (0, -1, 1)?

It does not mean that every element is zero. The trace is the sum of the diagonal elements. For a skew tensor, each diagonal element is zero; for a deviatoric tensor, only their sum is zero, so a diagonal such as (0, -1, 1) is possible. In both cases, the trace is zero.
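The distinction can be seen on a concrete tensor. The sketch below assumes the usual definitions $\operatorname{dev}\mathbf{T} = \mathbf{T} - \frac{1}{3}(\operatorname{tr}\mathbf{T})\,\mathbf{I}$ and $\operatorname{skw}\mathbf{T} = \frac{1}{2}(\mathbf{T} - \mathbf{T}^{\mathsf{T}})$; the function names and sample values are illustrative.

```python
# Diagonals of the deviatoric and skew parts of a sample tensor.
# Names and sample values are illustrative.

def trace(a):
    return sum(a[i][i] for i in range(3))

def dev(a):
    """Deviatoric part: subtract one third of the trace from each diagonal entry."""
    mean = trace(a) / 3.0
    return [[a[i][j] - (mean if i == j else 0.0) for j in range(3)]
            for i in range(3)]

def skw(a):
    """Skew part: (A - A^T) / 2."""
    return [[0.5 * (a[i][j] - a[j][i]) for j in range(3)] for i in range(3)]

T = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0],
     [7.0, 8.0, 9.0]]

D = dev(T)
W = skw(T)

dev_diagonal = [D[i][i] for i in range(3)]   # entries need not vanish individually
skw_diagonal = [W[i][i] for i in range(3)]   # every entry vanishes

# The diagonal (0, -1, 1) from the question is already deviatoric
E = [[0.0, 0.0, 0.0],
     [0.0, -1.0, 0.0],
     [0.0, 0.0, 1.0]]
E_is_deviatoric = dev(E) == E
```

Both `D` and `W` have zero trace, but only `W` is forced to have a zero diagonal.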